We know we want to find the values of w and b that correspond to the minimum of the cost function (marked with the red arrow).

The image below shows the horizontal axes representing the parameters ( w and b), while the cost function J( w, b) is represented on the vertical axes.

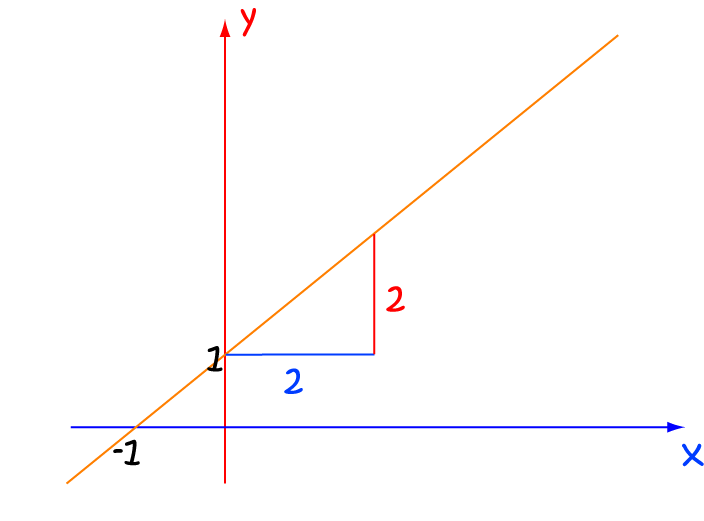

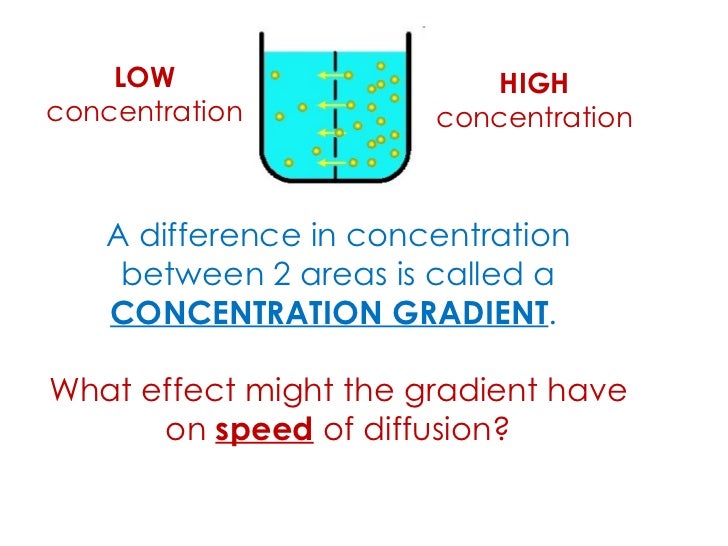

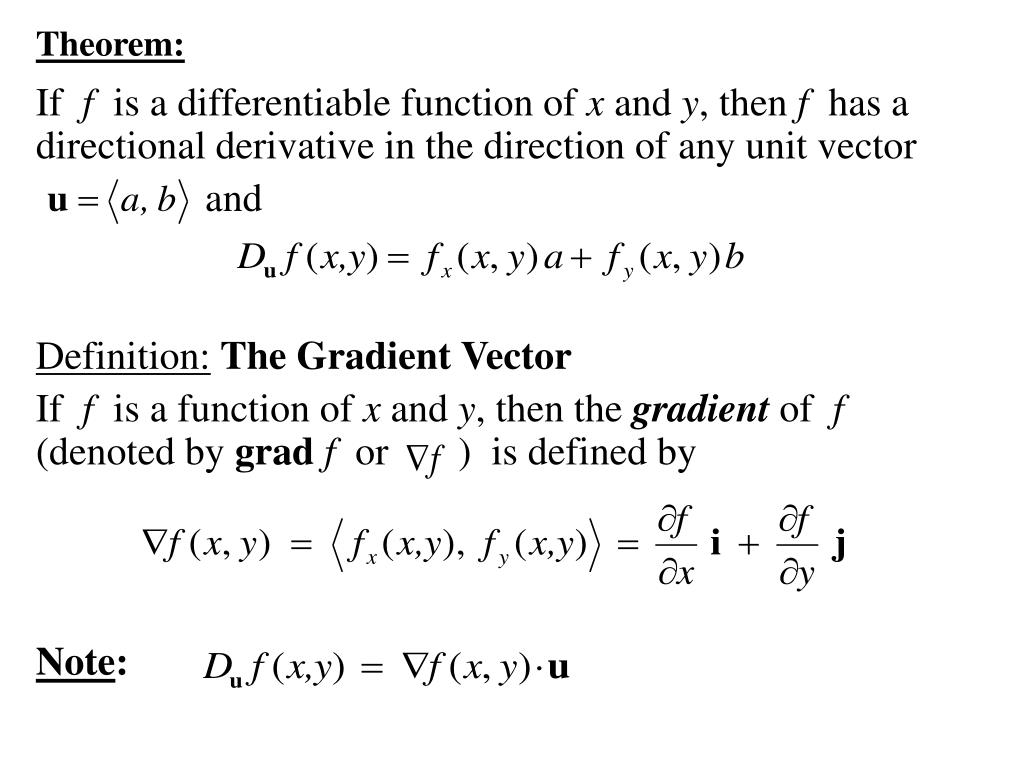

Imagine you have a machine learning problem and want to train your algorithm with gradient descent to minimize your cost-function J( w, b) and reach its local minimum by tweaking its parameters ( w and b). Let’s look at another example to really drive the concept home. So this formula basically tells us the next position we need to go, which is the direction of the steepest descent. The gamma in the middle is a waiting factor and the gradient term ( Δf(a) ) is simply the direction of the steepest descent. The minus sign refers to the minimization part of the gradient descent algorithm. The equation below describes what the gradient descent algorithm does: b is the next position of our climber, while a represents his current position. This is a better analogy because it is a minimization algorithm that minimizes a given function. Instead of climbing up a hill, think of gradient descent as hiking down to the bottom of a valley. Therefore a reduced gradient goes along with a reduced slope and a reduced step size for the hill climber. This perfectly represents the example of the hill because the hill is getting less steep the higher it’s climbed. This is because the steepness/slope of the hill, which determines the length of the vector, is less. Note that the gradient ranging from X0 to X1 is much longer than the one reaching from X3 to X4. Think of a gradient in this context as a vector that contains the direction of the steepest step the blindfolded man can take and also how long that step should be. Imagine the image below illustrates our hill from a top-down view and the red arrows are the steps of our climber. This process can be described mathematically using the gradient. As he comes closer to the top, however, his steps will get smaller and smaller to avoid overshooting it. He might start climbing the hill by taking really big steps in the steepest direction, which he can do as long as he is not close to the top. 
Imagine a blindfolded man who wants to climb to the top of a hill with the fewest steps along the way as possible. Known as the slope of a function in mathematical terms, the gradient simply measures the change in all weights with regard to the change in error. In machine learning, a gradient is a derivative of a function that has more than one input variable. In mathematical terms, a gradient is a partial derivative with respect to its inputs. But if the slope is zero, the model stops learning. The higher the gradient, the steeper the slope and the faster a model can learn. You can also think of a gradient as the slope of a function. "A gradient measures how much the output of a function changes if you change the inputs a little bit." - Lex Fridman (MIT)Ī gradient simply measures the change in all weights with regard to the change in error. To understand this concept fully, it’s important to know about gradients. You start by defining the initial parameter’s values and from there the gradient descent algorithm uses calculus to iteratively adjust the values so they minimize the given cost-function. Gradient descent in machine learning is simply used to find the values of a function's parameters (coefficients) that minimize a cost function as far as possible. To figuratively make the grade "be successful" is from 1912 early examples do not make clear whether the literal grade in mind was one of elevation, quality, or scholarship.Gradient Descent is an optimization algorithm for finding a local minimum of a differentiable function. Grade A "top quality, fit for human consumption" (originally of milk) is from a U.S. Meaning "class of things having the same quality or value" is from 1807 meaning "division of a school curriculum equivalent to one year" is from 1835 that of "letter-mark indicating assessment of a student's work" is from 1886 (earlier used of numerical grades). Meaning "inclination of a road or railroad" is from 1811. 
1510s, "degree of measurement," from French grade "grade, degree" (16c.), from Latin gradus "a step, a pace, gait a step climbed (on a ladder or stair) " figuratively "a step toward something, a degree of something rising by stages," from gradi (past participle gressus) "to walk, step, go," from PIE root *ghredh- "to walk, go." It replaced Middle English gree "a step, degree in a series," from Old French grei "step," from Latin gradus.
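The search for the w and b that minimize J(w, b) can be sketched in a few lines of Python. The text does not say what J(w, b) is. This minimal sketch therefore assumes a hypothetical linear-regression cost, the mean squared error J(w, b) = 1/(2m) Σ (w·xᵢ + b − yᵢ)², evaluated on made-up toy data. The names `gradient_descent` and `cost` are illustrative only and do not come from any library. Each iteration computes the two partial derivatives of J and steps against them.

```python
def cost(xs, ys, w, b):
    """Hypothetical cost: J(w, b) = 1/(2m) * sum((w*x + b - y)**2)."""
    m = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, learning_rate=0.1, steps=1000, w=0.0, b=0.0):
    """Minimise the cost above by repeatedly stepping against its gradient."""
    m = len(xs)
    for _ in range(steps):
        # Prediction error for every data point at the current (w, b).
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to w and b.
        dJ_dw = sum(e * x for e, x in zip(errors, xs)) / m
        dJ_db = sum(errors) / m
        # Update rule: new value = old value - learning_rate * gradient.
        w -= learning_rate * dJ_dw
        b -= learning_rate * dJ_db
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]  # toy data lying exactly on y = 2x + 1
    w, b = gradient_descent(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}, J = {cost(xs, ys, w, b):.6f}")
```

These toy points lie exactly on y = 2x + 1, so the parameters should settle very close to w = 2 and b = 1. On this data, a learning rate above roughly 0.3 makes the steps overshoot and diverge. That is the valley-hiking equivalent of leaping past the bottom.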

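The hill-climber picture, where steps shrink as the slope flattens, can also be reproduced numerically. This sketch applies the update b = a − γ∇f(a) to a hypothetical bowl-shaped valley f(x, y) = x² + 3y², chosen only for illustration. It estimates the gradient with central differences, which literally measure how much the output changes when each input is nudged a little bit. The names `numerical_gradient`, `descend` and `valley` are made up for this example.

```python
import math


def numerical_gradient(f, point, h=1e-6):
    """Approximate the gradient of f at point using central differences.

    Each component estimates how much f changes when that one input is
    nudged by a small amount h, with the other inputs held fixed.
    """
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def descend(f, start, gamma=0.1, steps=20):
    """Repeat b = a - gamma * grad f(a); return visited points and step lengths."""
    a = list(start)
    path = [tuple(a)]
    step_lengths = []
    for _ in range(steps):
        g = numerical_gradient(f, a)
        b = [a_i - gamma * g_i for a_i, g_i in zip(a, g)]
        step_lengths.append(math.dist(a, b))  # length of this step
        a = b
        path.append(tuple(a))
    return path, step_lengths


def valley(p):
    # Hypothetical valley with its minimum (the "bottom") at (0, 0).
    x, y = p
    return x ** 2 + 3 * y ** 2


if __name__ == "__main__":
    path, lengths = descend(valley, start=(4.0, 2.0))
    for k in range(5):
        x, y = path[k]
        print(f"step {k}: from ({x:.3f}, {y:.3f}), length {lengths[k]:.4f}")
```

From (4, 2), the first step has a length of about 1.44 and the second about 0.8. Every later step is shorter than the one before. The gradient, and with it the step, shrinks as the position approaches the minimum at (0, 0), even though γ stays fixed.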